H-Diplo | Robert Jervis International Security Studies Forum

Policy Roundtable III-4

The Practices and Politics of Cybersecurity Expertise

29 March 2024 | PDF: https://issforum.org/to/jprIII-4 | Website: rjissf.org | Twitter: @HDiplo

Editor: Diane Labrosse

Commissioning Editors: Rebecca Slayton and Lilly Muller

Production Editor: Christopher Ball

Pre-Production Copy Editor: Bethany Keenan

Contents

Introduction by Rebecca Slayton, Cornell University, and Lilly Muller, King’s College London

“Varieties of Cybersecurity Expertise” by Jon R. Lindsay, Georgia Institute of Technology

“De-essentializing Cybersecurity Expertise” by Aaron Gluck-Thaler, Harvard University

Introduction by Rebecca Slayton, Cornell University, and Lilly Muller, King’s College London*

Experts play pervasive and multifarious roles in shaping the international order. A sophisticated body of literature within international relations explores how experts shape governmental and international policy—including their work in framing problems, gathering and interpreting ambiguous evidence, and proposing policy solutions.[1] Separately, scholars whose work is informed by the field of science and technology studies have examined how experts’ technological work can enact international order more directly.[2] The essays in this forum focus on this latter mode of influence, showing how experts participate in politics by other means—specifically the making and breaking of the security of networked information systems.

By examining these practices, this forum calls attention to several key questions. What does cybersecurity mean to distinctive polities, and how do these meanings change over time? How do these different conceptions of cybersecurity shape what constitutes legitimate and authoritative expert practice? And to what extent can expert practices not only enact, but actively transform power relations? The seven research essays in this forum demonstrate substantial variation in how these questions are answered, and two concluding essays provide reflections on the significance of this variation for scholarship and policy.

This introductory essay frames this variation through a relational conception of expertise. We draw on scholarship which analyzes expertise not primarily as the possession of skills or knowledge, but rather as the enactment of relationships between experts, non-experts, and culturally valuable objects of expertise.[3] These relationships are enacted in ways that vary with time and place.

The first two essays in this forum analyze examples of how hackers attempt to use their skills to challenge dominant political structures.

Max Smeets analyzes the Cyber Partisans, a Belarusian hacking group that opposes the authoritarian government of Belarusian President Alexander Lukashenko. Smeets argues that the Cyber Partisans should not be understood as a proxy for state action, but rather as a social movement. He further suggests that integrating insights from social-movements theory into analyses of hacking may be a productive avenue for international relations scholars. Such work might build productively on existing analyses of the politics of hacking.[4]

Matt Goerzen’s essay examines the rise of an “anti-security” meme in the late 1990s underground. He argues that this meme was a response to the rise of a cybersecurity industry that threatened to co-opt members of the underground and eliminate the vulnerabilities that they wished to exploit. While there was no unified social movement, the tactics embraced under the banner of anti-security have resurfaced in prominent hacks on the surveillance industry in recent years—attacks which undermine both the technical and political viability of the corporations that support autocratic political regimes.

As Goerzen notes, hackers’ participation in the underground sometimes served as a source of technical authority that enabled them to earn lucrative paychecks in the mainstream security industry. The alignment of hackers and corporations might seem to be another example of how hackers have worked the system to their advantage. Or, it might be interpreted as the co-option of hackers’ expertise by the very establishment that they once opposed. Either way, however, these realignments came with new risks to the interests of both hackers and corporations.

In previous work, Ryan Ellis and Yuan Stevens show that bug bounty programs, which offer hackers financial rewards for information about vulnerabilities that can be exploited, cultivate precarity for hackers by turning them into gig workers.[5] In this forum, Ryan Ellis goes further by revealing the risks that such programs pose for the mainstream security industry. Contrary to the many scholars who portray bug bounty programs as a means of correcting market failures that lead to poor security, Ellis shows that bug bounty programs can also serve as targets for exploitation by malicious actors, thereby creating new risks.

Ellis’s essay demonstrates the risks that are associated with embedding expert knowledge and practice in the digital infrastructure. Andrew Dwyer further explores these risks, showing how globally-interconnected infrastructures for malware analysis and detection have shaped the possibilities for espionage and subversion. Thus, for example, the malware detection infrastructure of the Moscow-based endpoint detection vendor Kaspersky enabled it to download the US National Security Agency’s tools—whether accidentally, as claimed by Kaspersky, or in the service of the Russian government, as claimed by the United States and its allies. Dwyer emphasizes that analysts’ knowledge becomes embodied in malware detection networks, and trust in those networks creates expertise. By banning Kaspersky from US governmental networks, the US government effectively affirms its confidence in the technical authority of its analysts, but not in their moral authority.

In their article, Rebecca Slayton and Clare Stevens shift the focus from malware detection infrastructure to national critical infrastructure, which comprises the systems that are essential to the everyday safety and security of nation-states. They examine how experts construct and reconfigure boundaries around their authority and expertise, focusing in particular on the oft-cited boundary between security in information technologies (IT) and operational technology (OT). They argue that developing a bottom-up understanding of how experts alternately produce, maintain, navigate, and transcend boundaries between such fields can improve the development of policies to manage boundary-spanning risks.

Jesse Sowell’s essay examines the politics of maintaining reliable infrastructure by showing how internet operators use “rough consensus” to establish credibility and authority within their technical community. While internet-operating organizations ground their authority in this technical and ostensibly apolitical mode of decisionmaking, Sowell describes their work as a kind of “low politics” that shapes everyday communications infrastructures. For instance, Sowell opens with the refusal of the European network operator organization to accommodate Ukraine’s request to cut Russia off from the internet in the wake of the brutal Russian invasion of Ukraine.

In his essay, James Shires shifts the focus from maintaining digital connectivity to efforts to exclude individuals from digital networks. In particular, he shows how the enactment of cybersecurity expertise in carceral conditions differs from its enactment in everyday commerce and government operations. Mainstream studies of cybersecurity emphasize the tradeoffs between usability and security: organizations want technologies that are easy to use, but only in authorized ways and by authorized individuals. By contrast, prisons and other carceral contexts aim to prohibit use and enforce digital exclusion for incarcerated individuals. Shires notes that expert knowledge and practice are necessary both for circumventing and enforcing such exclusion.

Two concluding essays synthesize some broader lessons from this forum. First, Jon Lindsay notes that these essays help us move beyond two common but contradictory narratives about cybersecurity expertise. One is that cybersecurity expertise is scarce, something held only by a few technical geniuses. The other is that cybersecurity expertise is widely available, as it is relatively easy to launch highly damaging cyberattacks. These essays complicate the first narrative by showing that cybersecurity expertise is diverse and distributed across social organizations, rather than something possessed by relatively rare hacker-geniuses, and they complicate the second by revealing the substantial labor needed to either maintain or compromise the security of complex socio-technical systems.

Aaron Gluck-Thaler concludes with a reflection on how and why some expert practices and institutions have become dominant, while others have been marginalized. As he notes, “the priorities of states and corporations do disproportionately shape the possible forms that cybersecurity expertise can take,” as evidenced by the alliance of cybersecurity and national surveillance industries, and the marginalization and cooptation of the hacker underground. He argues for additional research that examines how expert practices are not only shaped by the interests of states, corporations, and civil society, but also help to co-produce them.

This observation brings us back to the relational conception of expertise. The expert authority of the individuals and communities which are discussed in this forum ultimately derives from their ability to persuade other powerful actors that they possess specialized knowledge and skills—whether through theatrical technical disruptions, the reliable provision of infrastructure, the enforcement of digital exclusion, or the identification of new exploits and the construction of means for preventing those exploits. It is through these relational practices that experts gain not only credibility with policymakers but also the ability to pursue politics by technical means. We hope that these essays will encourage other scholars to examine the daily work of technical experts as a locus of power in international politics.

Contributors:

Rebecca Slayton is Associate Professor in the Department of Science & Technology Studies and the Judith Reppy Institute for Peace and Conflict Studies, both at Cornell University. Her research examines how new fields of expertise become institutionalized and gain authority in the contexts of international security and cooperation. Her first book, Arguments that Count: Physics, Computing, and Missile Defense, 1949–2012 (MIT Press, 2013), shows how the rise of a new field of expertise in computing reshaped public policies and perceptions about the risks of missile defense in the United States. In 2015, Arguments that Count won the Computer History Museum Prize. Slayton’s current book project, Shadowing Cybersecurity, examines how expert knowledge and practice in cybersecurity have been shaped by conflicting notions of security, as well as the irreducible uncertainties associated with intelligent adversaries.

Lilly Pijnenburg Muller is a Research Associate in the War Studies Department at King’s College London. She holds a non-resident fellowship at the Tech Policy Institute at Cornell University and the Norwegian Institute of International Affairs (NUPI). She is an interdisciplinary researcher in Critical Security Studies and Science and Technology Studies (STS) with interests in technology, the politics of (in)security, and power. During her Fulbright postdoctoral Fellowship in the Science and Technology Studies department at Cornell University (2022–2023), she co-edited this H-Diplo|RJISSF forum on cybersecurity expertise. Prior to joining Cornell, Lilly held research positions at the Oxford Martin School at the University of Oxford and the Norwegian Institute of International Affairs (NUPI). She received a PhD in War Studies from King’s College London.

Andrew Dwyer is a Lecturer in Information Security at Royal Holloway, University of London. His interests lie at the intersection of understanding decisionmaking as it is mediated through computation, the role of cyber operations and capabilities, as well as “critical” approaches to the study of cybersecurity. Beyond Royal Holloway, he is the lead of the UK Offensive Cyber Working Group and has previously held research positions at Bristol and Durham universities after completing his DPhil at the University of Oxford in 2019.

Ryan Ellis is an Associate Professor of Communication Studies at Northeastern University. Ryan’s research and teaching focus on topics related to communication law and policy, infrastructure politics, and cybersecurity. He is the author of Letters, Power Lines, and Other Dangerous Things: The Politics of Infrastructure Security (MIT Press, 2020) and the editor (with Vivek Mohan) of Rewired: Cybersecurity Governance (Wiley, 2019). Prior to joining Northeastern, Ryan held fellowships at the Harvard Kennedy School’s Belfer Center for Science and International Affairs and at Stanford University’s Center for International Security and Cooperation (CISAC). He received a PhD in Communication from the University of California, San Diego. He is currently working on a book about hackers, precarious work, and bugs for MIT Press.

Aaron Gluck-Thaler is a PhD candidate in the Department of the History of Science at Harvard University, and an affiliate of the Berkman Klein Center for Internet & Society. Aaron studies the history of surveillance and its relationship to scientific practice. He is the 2023–2024 IEEE Life Members’ Fellow in the History of Electrical and Computing Technology.

Matt Goerzen is a student in the History of Science department at Harvard University. This work compiles some loose research from the report “Wearing Many Hats,” co-authored with Gabriella Coleman and published by Data & Society Research Institute with their financial support. Goerzen is interested in the history of computer security, with a particular emphasis on alternative historical imaginaries of computer security.

Jon R. Lindsay is an Associate Professor at the School of Cybersecurity and Privacy and the Sam Nunn School of International Affairs at the Georgia Institute of Technology. He is the author of Information Technology and Military Affairs (Cornell, 2020) and coauthor of Elements of Deterrence: Strategy, Technology, and Complexity in Global Politics (Oxford, 2024). His latest book project is Age of Deception: Cybersecurity and Secret Statecraft.

James Shires is a Senior Research Fellow in Cyber Policy at Chatham House. He is a co-founder and trustee of the European Cyber Conflict Research Initiative (ECCRI), and a non-resident associate fellow with The Hague Program for International Cybersecurity. He speaks regularly and has published extensively on cybersecurity and global politics, including The Politics of Cybersecurity in the Middle East (Hurst/Oxford University Press, 2021).

Max Smeets is a Senior Researcher at the Center for Security Studies (CSS) at ETH Zurich, and Director of the European Cyber Conflict Research Initiative. He is the author of No Shortcuts: Why States Struggle to Develop a Military Cyber-Force (Oxford University Press & Hurst, 2022) and co-editor of Deter, Disrupt or Deceive? Assessing Cyber Conflict as an Intelligence Contest (Georgetown University Press, 2023), with Robert Chesney. He has published widely on cyber statecraft, strategy, and risk.

Jesse Sowell, PhD, is a Lecturer in Internet Governance and Policy at University College London’s Department of Science, Technology, Engineering, and Public Policy (STEaPP), focusing on the operational institutions that ensure the Internet stays glued together in a secure and stable way. His work focuses on how governance and authority are constructed within these communities and institutions, how that authority differs from authority in conventional state-based institutions, and how to develop institutional and policy interfaces that can help bridge the gaps between the two. Prior to joining UCL, Jesse held positions as an Assistant Professor of International Affairs at Texas A&M University and as a Postdoctoral Cybersecurity Fellow at Stanford. Jesse holds a PhD in Technology, Management, and Policy from MIT.

Clare Stevens is a Teaching Fellow in International Security at the University of Portsmouth. Her research has looked at the controversies, politics and boundary work of defining “cybersecurity,” including what it can teach us more broadly about security, secrecy and technologies in contemporary international security. She has recently co-authored a piece in International Political Sociology entitled “What Can a Critical Cybersecurity Do?” and an article on the contested politics of private cybersecurity expertise in Contemporary Security Policy, entitled “Assembling Cybersecurity.”

“Collective Resistance in the Digital Domain: The Cyber Partisans as an Exemplar”

by Max Smeets, ETH Zurich, Center for Security Studies

The Cyber Partisans, a Belarusian hacking group formed in September 2020, has claimed responsibility for several high-profile cyber operations, including an attack against the Belarusian railway system that reportedly halted Russian ground artillery and troop movement into Ukraine and allowed the group to access the complete database with personal information of those crossing the Belarusian borders.[6]

It might be tempting to describe the Cyber Partisans as a “cyber proxy,”[7] “mercenary,”[8] “semi-state actor” group,[9] or “intermediary.”[10] But the Cyber Partisans do not fit these labels. The Cyber Partisans do not act as an intermediary for another government’s interests, and have a history of independent operations against the government of Belarus. As it is a small group of closely linked individuals with a strong connection to Belarus, the Cyber Partisans also differ from other non-governmental “hacktivist” efforts, such as Anonymous.[11]

Instead, the Cyber Partisans are more akin to a digital resistance movement—a concept that is not yet well-described in the literature on cyber politics. A resistance movement is commonly defined as “an organized effort by some portion of the civil population of a country to resist the legally established government or an occupying power and to disrupt civil order and stability.”[12] The Cyber Partisans are specifically organized to resist—and ultimately overthrow—the Lukashenko regime in Belarus through the use of digital means. The Cyber Partisans’ use of digital violence is what Michael Lipsky calls a “strategically deployed resource”—and not, for instance, a spontaneous eruption of hacktivist rage.[13] To this end, the group also works together with other resistance organizations that do apply kinetic force. We have not seen a violent digital resistance movement like this to date.

The activities of the Cyber Partisans highlight the need to broaden our theoretical perspectives in the study of non-state actors in cyberspace, beyond principal-agent models, mercantile analogies, or institutional design theories on delegation and orchestration. Cyber scholars should engage more with research on social movements, resource mobilization, and collective resistance.

Formation

The Cyber Partisans were formed in September 2020, following the elections in Belarus and the ensuing protests and brutal, repressive crackdown by the Lukashenko regime earlier in the year.[14] The group is one of three arms of the collective Suprativ, a larger resistance movement opposing the government.[15] The Suprativ movement includes two other groups: the Flying Storks, an activist network that also executes kinetic operations;[16] and the PSS: People’s Self-Defense Squad.[17] The latter group provides training for vigilante action against the government. This includes, for example, online videos on how to free yourself from zip ties when captured, how to make smoke bombs, and how to counter the crackdown tactics that riot police use against protests.[18]

The Cyber Partisans’ membership is said to include former IT sector professionals “who learned everything on the go. They’re not hackers, none of them were hackers at any point.”[19] The group has said on several occasions that it does not receive funding or support from Western governments, and reported that “most” of its members are Belarusian citizens located in Belarus, stressing its grass-roots origins and physical presence as protesters in Belarus.[20]

Unlike most other hacktivist groups, the Cyber Partisans have designated an official spokesperson.[21] Based in New York City, Yuliana Shemetovets gives interviews to explain the rationale behind their operations and the group’s broader campaign goals.[22] She says that she does not know the identities of the Cyber Partisans but is given instructions through encrypted messaging.[23] “I don’t know who they are, and I don’t want to know,” Shemetovets says. “Even if someone gets access to my phone…they are not going to find anything that can reveal any sensitive information.”[24]

Numerous hacker groups have falsely claimed they were not affiliated to a government. Thus, we must ask: are the Cyber Partisans truly independent and not operating on behalf of a state? There is no definitive evidence that the hacking collective is independent of state sponsorship. However, as Juan Andres Guerrero-Saade points out, the way in which the Cyber Partisans operate suggests they are an independent effort: “Most importantly, their limitations and tasking appear organic. They claim that in order to discover important government targets, they collaborate with a union of current and former Belarusian security officers (BYPOL) better acquainted with the inner workings of the government.”[25]

This suggests that the Cyber Partisans are not a large, loosely connected group of international hackers, but rather a small, trusted group of individuals with a strong connection to Belarus. Current membership is said to be around 30.[26] According to the spokesperson of the group, four individuals are responsible for “ethical hacking” while the others provide support, analysis, and data processing.

The Cyber Activity of the Cyber Partisans

The Cyber Partisans have conducted a wide set of operations against the Belarusian regime since September 2020. They maintain a list of operations on the website of the Suprativ collective.[27] Early operational activity of the Cyber Partisans included Distributed Denial of Service (DDoS) attacks against government websites.[28] They also reportedly added Belarusian President Alexander Lukashenko’s name to the Ministry of Internal Affairs’ “Most Wanted” list.[29] The group later expanded its disruptive activities as well as its doxing activities, which involve publicly releasing personal information.

The two largest sets of coordinated activities conducted by the Cyber Partisans are Operation Scorching Heat and Operation Inferno.[30] As part of Scorching Heat, the Cyber Partisans released the passport details of millions of Belarusians, obtained by hacking the government “Passport System” and traffic police database. According to the group, “The database contains all people who have a passport, residence permit or similar documents. We can’t say for sure, because sometimes a person has several documents. But in a separate sample, we saw more than 11 million personal numbers.”[31] Scorching Heat also released other government databases, including the 102 ambulance emergency call logs, the database of violations of the Department of Internal Security of the Interior Ministry, the video database of the Interior Ministry drones, and the video surveillance system of the detention center, among others.[32]

The second campaign, Inferno, ran from November to December 2021. It included three operations against organizations with ties to the Lukashenko regime. The first operation encrypted the workstations, databases, and servers of the Belarusian Academy of Public Administration. The second operation targeted Belaruskali, one of the largest state-owned companies producing potash fertilizers, and the third operation was aimed at Mogilevtransmash, one of the largest vehicle manufacturing companies in Belarus.[33]

The hack that has gained the most international attention, however, was not part of these two campaigns. In that attack, which targeted the Belarusian railway, the Cyber Partisans claimed to have put the train traffic control systems in the Belarusian cities of Minsk and Orsha into a “manual control” mode that would “significantly slow down the movement of trains” without creating “emergency situations.”[34] The spokesperson explained that the attack aimed “to indirectly slow down Russian troops on the territory of Belarus, and show [that its] strategically most important infrastructure is overlooked by Lukashenko.” Additionally, “Belarus is at the centre of Europe and a lot of other countries are using these systems. […] It’s to show that Lukashenko is not only not safe for the people of Belarus, but also for its neighbours.”[35]

The Cyber Partisans have also provided technical support to the Belarusian resistance movement. For example, during the 2020 protests against Lukashenko, the group used its Telegram account to share three links to proxy servers with the protesters marching in the streets.[36] In addition, the group developed new tools to provide secure channels of communication. They announced an encrypted SMS application to allow protesters to communicate securely, without an internet connection, and the development of a secure Telegram application (Partisan Telegram).[37]

The Cyber Partisans have developed a victory plan, called Momentum X, which consists of two phases. The first, Moment X, involves the launch of multiple actions aimed at eliminating the regime of Lukashenko. As stated on the group’s website, “it is the beginning of an indefinite protest up to the moment of victory. The exact date will not be known until Moment X, which is set according to the necessary degree of readiness of the partisan organizations and the entire protesting community.”[38] Second, there is Phase X. This is “a period of time during which Moment X can be declared at any point. The beginning of Phase X will be announced in advance.”[39] During this phase, the group will also release their X-App to help paralyze the internal networks of the regime. It seeks to mobilize the community against “vulnerable points” of the regime through another application called the Vulnerability Points Map.[40]

Collaborations

The Cyber Partisans stress that their actions and collaborations are strictly intended to produce operative effects on Belarusian territory and infrastructure only. Thus, while the Ukrainian government has called on volunteers to join their IT Army in the fight against Russia, the Cyber Partisans do not participate in the IT Army’s activities or execute operations outside of Belarus’s borders.[41] The group is, however, willing to share best practices about the targeting of Russian forces.[42]

The Cyber Partisans owe much of their successful targeting, which is often a major issue for many other non-state groups, to their partnership with an organization of former Belarusian government officials, BYPOL. Launched in October 2020, BYPOL “unites hundreds of incumbent and former security officers looking to restore the rule of law and order in Belarus.”[43] Their stated goal is democratic rule in Belarus, entailing new presidential and parliamentary elections, led by Svetlana Tikhanovskaya, the opposition presidential candidate in 2020. “The Cyber Partisans wrote to us to help them find a way to understand all the law enforcement and intelligence agencies,” Aliaksandr Azarau, a former lieutenant colonel in Belarus’s police force now working for BYPOL, says. “They wanted to know how to penetrate inside these organizations to steal information. Because we work there, we know everything inside. We consulted with them on how to do this.”[44] In exchange, BYPOL receives access to data from the Cyber Partisans to aid their investigations into the regime, which are subsequently published on BYPOL’s Telegram channel.[45]

The group also frequently collaborates on projects with other arms of the Suprativ collective, a larger resistance movement opposing the government of Belarus. They help the Flying Storks, an activist network which also executes kinetic operations, by curating the Belarus Black Map, a database and comprehensive search system of identities and physical addresses of government officials, KGB officers, and anti-riot police to assist the work of opposition organizations.[46] This database can be searched to find the group’s doxed profiles.

Furthermore, the Cyber Partisans have worked with news agencies and other journalism groups. They collaborated with CurrentTimeTV on the reporting of COVID-19 infection and death numbers for Belarus.[47] Bellingcat has also used information released by the Cyber Partisans for its report on Wagnergate (an attempted Ukrainian sting operation)[48] and the uncovering of a Russian spy in Italy.[49]

Difficult Choices to Be Made

The Cyber Partisans are faced with a series of choices about their modus operandi. I discuss five choices here, which relate to location, scale, communication, engagement with international politics, and targeting.

Location: To ensure members of the Cyber Partisans are free from danger or threat, the most obvious policy would be for them to live outside of Belarus or Russia. This would significantly reduce the chances of their being found and detained. At the same time, the operations of the Cyber Partisans show that there is an inherent nexus between the conventional domain and cyberspace. It greatly helps a hacking group’s operational effectiveness if people can provide physical access to the systems. For example, a hacking group can benefit from the work of insiders, who can plug a USB stick into a device to spread malware. An insider might also be able to share information about the target environment, for example by telling the hacker group what type of (outdated) software is running on the workstations.

Scale: To scale up the operational efforts it would make sense for the Cyber Partisans to grow the group’s membership (assuming it is true they only have a handful of operators and about 30 members). At the same time, bringing in more hackers makes operational security more challenging and introduces other security risks, such as insider threats that could pass on confidential information.

Communication: Collective resistance requires domestic and international support. Support inherently relies on effective communication. Having a spokesperson who can be interviewed, attend conferences, and speak at roundtables helps the Cyber Partisans in a number of ways. It not only makes it easier to engage with the group, but also draws out the human element, and potentially the human sacrifice, of their activity. Yet it also leads to new vulnerabilities, not least for the relatives and friends of those who do not remain anonymous.

Engagement with international politics: Russia’s further invasion of Ukraine in 2022 meant that the Cyber Partisans needed to be cognizant of international political dynamics. On the one hand, the invasion has led to growing international attention to and interest in the region, including Lukashenko’s close relationship with Russian President Vladimir Putin. This has helped the cause of the Cyber Partisans: they are part of a larger fight against the evils of authoritarianism. On the other hand, it has led the group’s actions to be often folded into the larger events of Ukraine and misrepresented—as mischaracterizations of the Cyber Partisans as a Ukrainian cyber proxy exemplify.

Targeting: Conducting cyber attacks can help raise awareness of the Cyber Partisans’ cause and existence. Yet the more significant types of disruptive or destructive operations are hard to pull off and hardly weaken the legitimacy of the targeted institution. From this perspective, doxing can be a more appealing option. Doxing is often easier to pull off, especially if it is combined with physical access, and it turns the spotlight on the victim organization rather than the attacking entity. In the case of the Cyber Partisans, the internal corruption that is revealed through the disclosure of the documents can help to erode support for the regime in Belarus.

Any resistance movement faces difficult organizational and operational choices. A fruitful area for further research is to draw on existing work on resource mobilization and more systematically compare how digital resistance movements differ from conventional resistance movements in their (violent) tactics, and address the implications of those differences.[50] It seems to be much easier to hit targets remotely when operating in cyberspace, but harder to cause major disruptions. Furthermore, cyber operations can more easily be misattributed to other groups, especially if they use common tactics, techniques, and procedures (TTPs).

It is likely also harder to attract and train talented individuals for cyber operations.[51] Yet, it is not clear whether digital resistance movements therefore have to organize themselves differently compared to conventional resistance movements or whether this means that conventional resistance movements are thus more or less likely to start adopting digital forms of violence. Existing scholarship on the macrostructural situations of social movements potentially provides a useful starting point for future research to address these questions and analyze the conditions in which digital resistance can emerge and be sustained.[52]

“Visions of (In)Security: Anti-Security, Project Mayhem, and Unruly Expertise”

by Matt Goerzen, Harvard University

Introduction[53]

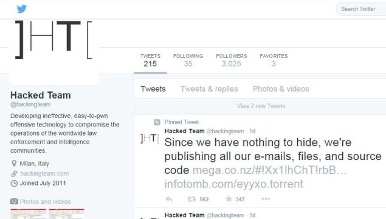

On 5 July 2015, the Twitter account of the cybersecurity company Hacking Team tweeted a surprise announcement: “Since we have nothing to hide, we’re publishing all our e-mails, files, and source code.” A link associated with the tweet provided access to virtually all of the company’s corporate data, which largely confirmed suspicions that Hacking Team was supplying hacking and spyware tools to repressive regimes in Sudan, Saudi Arabia, Bahrain, and elsewhere.[54] Furthermore, the company’s Twitter handle had changed from Hacking Team to Hacked Team. Hacking Team had been hacked.

They were not alone. Since 2011, anonymous hackers have targeted multiple companies and organizations suspected of supporting state oppression or otherwise curbing the possibility of political dissent. These hacks have often complemented the work of civil society groups who are interested in a broader notion of computer security, one aligned more with what the United Nations (UN) has called “human security”—an approach aimed at securing people’s basic rights to food and political autonomy.[55]

Yet many of these hackers promote the notion of “anti-security,” or “antisec.” What does it mean to be “anti-security?” How do these hackers envision security in relation to the companies and nations that claim to provide it?

This essay traces the emergence of anti-security to structural changes near the turn of the millennium. With the mainstreaming of the World Wide Web, the growing viability of e-commerce, and increasing government commitments to secure network-connected infrastructure, some hackers began to decry the rise of what they called the “security industry.” The specter of the security industry was invoked in Internet Relay Chat (IRC) channels, conference talks, hacker journals, and electronic textfiles—the underground’s favored method of circulating small bundles of information.[56] The “security industry” referred loosely to a growing assortment of government-sponsored research shops, auditing firms, security service and tool vendors, consultancies, and an array of boutique start-ups run by ex-military and “white hat” hackers. Some hackers became convinced that this industry was exploiting the underground, both by villainizing hackers to justify their services, and by extracting hackers’ hard-won knowledge about computer vulnerabilities. Industry activity led to the patching of vulnerabilities, shutting down the access that was prized by “black hat” hackers. Meanwhile, motivated by the prospect of legal safe harbor for research, mainstream legitimacy, and steady paychecks, white hat hackers were increasingly stepping up as this security industry’s rank-and-file workers.

Amid speculation that the underground was dying,[57] an assortment of hackers pushed back, seeking to rebuke the security industry, punish “sell-outs” and “hacker pimps,” and preserve the underground scene in an idealized state. In 2001, hackers launched the “Anti-Security Movement” as a concerted effort to denounce the full disclosure practices that had brought hackers and mainstream security figures together.[58] The following year, hackers initiated “Project Mayhem,” a self-consciously black hat initiative to hack, dox, and otherwise shame and ridicule those white hat hackers and mainstream security experts seen to be contributing to the rise of the hated security industry.[59] Along the way, a handful of other actors and chaos agents entered the fray, offering their take on the industry and its worst excesses. While many of those involved articulated alternative visions for computer security, the escalating rhetorical denigrations and performative stunts of Project Mayhem largely overshadowed those visions—at least in the short term.

This essay will sketch these developments and reflect on their lasting significance. While many hackers ultimately judged both the original Anti-Security Movement and Project Mayhem as failures, they became powerful touchstones for later generations of politically-inclined hacker activists. The concept of anti-security supported a lasting vision of unruly, anti-establishment hacking—a mode of hacker expertise that is firmly autonomous from employment contracts, training certifications, or bug bounty leaderboards.

The Eclectic Origins of Anti-Security

In the early 1990s, prominent computer scientists and industry professionals spoke out against the idea of hiring hackers, arguing that it was like hiring burglars as bank guards, or arsonists as fire marshals. Many hackers spent the better part of the 1990s fighting these perceptions.[60] The controversial “full disclosure movement” served as one crucial mechanism. Exemplified by the hacker-founded mailing list Bugtraq, full disclosure facilitated dialogue between members of the underground hacking scene and more mainstream technologists: university systems administrators, hobbyist and professional security researchers, and eventually even representatives of the companies whose products were being hacked. Participants openly shared knowledge of vulnerabilities and, often, even functioning exploit code. They developed a conception of hacking that was less tied to autonomy and freedom of access, and more oriented towards the discovery and documentation of security vulnerabilities as an intellectual and ultimately commercial pursuit. Meanwhile, this research enabled the exploitation of vulnerabilities in a “proof of concept” mode to shame vendors like Microsoft, shifting the burden of insecurity away from the hackers themselves and onto the negligence of corporations.

By the late 1990s, many organizations recognized the hacker underground as an incubator for computer security expertise. Casting aside earlier anxieties, they sought to hire from the underground. Hackers became military consultants, penetration testers on auditing teams, tool developers, and in-house experts at companies that were beginning to take security seriously after a half-decade of hacker-initiated bad press.

In 1999, security researcher Marcus J. Ranum articulated a new, structural reason not to hire experts from the underground: what might it mean for a burgeoning security industry to reward people whose prior activity (on “the dark side”) was a chief reason for the security industry to exist in the first place?[61] What sort of perverse incentives might this create?[62]

“Instead of just having the ‘bad guys’ trying to find and exploit holes in systems, now we have the ‘good guys’ doing it too, or hiring ‘ex-bad guys,’ repackaging them as ‘good guys,’ and selling them to you for $400 an hour,” he argued in a 1999 special security-themed issue of Usenix’s ;login: magazine. “When you read about a shocking new vulnerability found in something, research not only the vulnerability but the individual or organization that announced it. Ask yourself if they happen to sell a solution to the problem, and keep your skepticism in gear.”[63]

Ranum soon found some strange bedfellows: a number of influential figures in the hacker underground began to amplify and adapt his message under the banner of the “Anti-Security Movement” (or “antisec,” for short). In early 2001, the Iceland-based hacking group security.is established a webpage for the movement at anti.security.is.[64] A links page featured Ranum’s talks and articles alongside the websites of supporting organizations, including the esteemed underground hacker group Association de Malfaiteurs (ADM).

The site’s central manifesto called for an immediate end to the public, full disclosure of computer vulnerabilities. However, this anti-disclosure policy left a lot of room for debate and disagreement. Contrary to what anti-security might suggest, some participants argued anti-disclosure would serve as a net positive for the security of the internet. As the anonymous author of the manifesto put it:

A digital holocaust occurs each time an exploit appears on Bugtraq, and kids across the world download it and target unprepared system administrators. Quite frankly, the integrity of systems worldwide will be ensured to a much greater extent when exploits are kept private, and not published.[65]

Figure 2. Two promotional images from the “Gallery” section of the anti.security.is website.

Many observers also noted a less altruistic motive for ending full disclosure: the hardcore underground researchers could continue to exploit the vulnerabilities they uncovered for their own use.[66]

It quickly became clear that anti-disclosure could serve a range of other agendas, too. The site hosted a growing collection of often-incommensurate statements, alternate manifestos, and FAQs from supporters, and participants debated with one another on the site’s message board.

Many critiqued the way full disclosure empowered the security industry’s growth, along two dimensions. First, they argued that disclosure armed unskilled, wannabe-hacker “script kiddies” with exploits that would then be used for website defacements and other attention-grabbing stunts—thus justifying the criminalization of hacking and enhancing security industry sales pitches. Second, they argued that the employment prospects enabled by the security industry’s growth incentivized hackers to disclose or sell underground knowledge as a form of resume building—effectively diminishing the power of the underground. As one participant broke it down:

…commercial security services rely on the presence of full disclosure mechanisms to feed script kids in order for them [script kids] to reduce real world security and thus increase their [security industry] sales activity and raise their profits. While that happens, the underground takes the hard shot: hackers are tracked down, bugs are patched, backdoors are discovered, etc.[67]

Some rationalized anti-security as a way to maintain the possibility of meaningful resistance to corporate or state overreach. As one contributor put it:

Imagine a secure internet…

Content Filters, IDS [intrusion detection systems], censorship, law enforcement, …

i mean.. that’s what security is also about…

Is it really what you want? A secure internet would take away all your means to oppose… It would be the equivalent of a police state.[68]

But other participants were less roundly opposed to the prospect of getting paid to improve internet security. For some, the marketing of security services and anti-disclosure could co-exist. (Indeed, some members of ADM had founded a security firm called Qualys in 1999).[69] They criticized security industry excesses and the “pimping out” of “mediawhore” hackers. For these figures, vulnerability disclosure and professional hacking per se were not the problem.[70] Hewing closer to Ranum’s position, they instead expressed disgust with the growth of a profit-motivated, financialized security services industry that contributed to and benefited from the dissemination of fear, uncertainty, and doubt (“FUD”). Consider this entry from one of the site’s FAQs:

Q: Who profits from the infosec[71] war?

A: Security companies do (this is their line of employment). They need to use scare tactics to motivate more people and companies into thinking their services are not only desirable, but necessary. It’s simple Capitalism. These corporations make security popular and fashionable, and turn it into a consumer pastime. Why can’t they carry out their jobs with less glamour?[72]

Still others framed it as a labor or intellectual property issue. Some argued that the exploit code appearing on mailing lists like Bugtraq was often posted without the consent of its authors—sometimes by people passing themselves off as the original author to accrue credit. Some objected in a more general sense, suggesting that full disclosure was tantamount to giving away value. “By defending the virtues of fully disclosing vulnerabilities in public forums, one already contributes to the expansion of corporate empires, without receiving their fair share of the millions of dollars generated by the information technology industry.”[73]

And others gestured vaguely towards visions of “real world security” or “people’s security,” where the internet could be made more secure for regular people without an escalation of an “attack/defense context.”[74] The possibility of a public-interest approach to security would become a significant theme in subsequent discussions related to anti-security. But it struggled to secure attention in the midst of an escalating series of spectacular developments.

Making Mayhem of Anti-Security

In early summer 2002, the relatively civil discourse found on anti.security.is was overshadowed by a parallel development. A hacker crew calling itself ~el8 began disseminating a textfile announcing “pr0jekt MAYHeM” (Project Mayhem).[75] Promising to “br1ng an end to the security community,” the zine’s authors posted a list of “missions”—exhortations to pollute the discourse on prominent security forums with bogus disclosures, and to hack both “media lmrz” (attention-seeking, resume-building white hat hackers) and prominent computer security figureheads, such as Purdue University computer scientist Eugene Spafford.[76] Subsequent issues showcased trophies from these hacking escapades—bash histories, home directory contents, and even email exchanges—intermixed with entertaining and outrageous editorial commentary. In one inflammatory example, a controversial ADM member’s emails were leaked, suggesting he was avidly consulting for the US military.[77]

An associated group calling itself the Phrack High Council (PHC) appeared as well, targeting the esteemed underground journal Phrack for its purported complicity in the security industry’s growth.[78] As they expressed it in a pithy diagnostic: “EVERY TECHNIQUE THAT IS RELEASED IN PHRACK IS NOW REALIZED BY THE SECURITY INDUSTRY. THE SEC INDUSTRY NOW SPENDS TIME TO THWART THESE TECHNIQUES.”[79] PHC managed to wrest control of #phrack, a major node in the hacker IRC network on EFNET, away from the journal’s editors. They then disseminated the unfinished version of Phrack 59, complete with spurious additions which made it seem like the journal’s staff supported anti-security and were contrite about their supposed role in selling out the underground.

Others called for the boycott or detournement of DEF CON, the flagship annual Las Vegas-based hacker conference. They argued the conference’s founders had perverted it into nothing more than a side show for their recently-established big ticket industry event Black Hat Briefings—a way to give Black Hat attendees a glimpse of the hacker threat, and thus spread more industry-serving FUD. “You’re participating to [sic] a scam to sell security to corporate black hat attendees. Defcon should be paying you,” reads a line on an anonymous flyer, probably produced by a hacker known as “Gweeds.”[80]

This “hacker pimping” became a chief concern of Gweeds, who advanced the notion of a “Black Hat Bloc” as a more explicitly politicized correlate to Project Mayhem. Gweeds laid out his project at H2K2, the July 2002 edition of the Hackers on Planet Earth (HOPE) conference. His presentation, titled “Black Hat Bloc or How I Stopped Worrying About Corporations and Learned to Love the Hacker Class War,”[81] advanced an ardently anti-capitalist view of hacker security research. Gweeds contrasted a historical hacker conception of security, premised on open access and privacy, with the version of security he saw as at work in the security industry: “Security of their legitimacy to power, money, and public resources. The security of [corporation’s] machines, of [corporation’s] right to own the network that was built on public funds. The security to protect you from taking it back.” The audience applauded loudly as he called out prominent members of the hacker scene for facilitating this shift. “They’re making money, sure. But they’re also increasing the reach of the police state at the expense of fellow hackers who will go to jail because of these crimes.” Gweeds argued instead that the unabashedly black hat position should be valorized as a means to push back against the overreach of states and corporate entities, and secure the internet as a public good.

The talk was favorably reviewed by The Register, an influential tech publication. But the review itself attracted massive backlash.[82] Hackers who were moving into the security industry wrote in to the publication, undermining Gweeds’ points and accusing the publication of rewarding anti-social elements of the scene with undeserved attention.[83]

By August 2002, many awaited the drama guaranteed to unfold at DEF CON 10. A much-speculated-about individual or group known as “GOBBLES Security” was slated to speak. Though positioned outside both the antisec and security industry camps, they performatively pushed back on the respectability-seeking “white hat” trend in hacking. For instance, while GOBBLES discursively supported full disclosure, in early 2002 they hijacked the Anti-Security Movement website, mocking participants’ ostensibly anodyne motives by poking fun at the idea that skilled black hat hackers would just “sit on [their] warez” (i.e., engage in research solely as an intellectual pursuit).[84] Moreover, when they did engage in full disclosure, it typically served to deride or humiliate other researchers. In this way, GOBBLES tacitly aligned with many aspects of the anti-security position by performatively insisting on the continued viability of an unruly, non-commoditized form of hacker expertise.[85]

GOBBLES had become known for sophisticated advisories published to mailing lists like Full Disclosure, all wrapped in long-winded, tangential rhetoric that was coded in a cliched Eastern European ESL writing style punctuated with phoneticized turkey sounds. The schtick only made the unusual sophistication of GOBBLES’ exploits all the more perplexing. Fascinated by the phenom, respected security researchers offered to foot the bill for a GOBBLES representative to attend DEF CON, according to a WIRED article titled “Hacker Humbles Security Experts.”[86] Onlookers would make sport of attempting to figure out who, exactly, was behind the group for years to come.

The question of how some form of computer security could be pursued outside of the auspices of the security industry remained very much on the agenda. Speaking just before GOBBLES’ much-hyped talk, security researcher Steve Manzuik (“hellNbak”) echoed some of the points Gweeds had made a month earlier during his presentation at H2K2.[87] Notably, Manzuik endorsed the idea of hackers engaging in security research and action to support non-profits and other civil society groups—even suggesting that proactive info-seeking hacking-and-leaking from companies like Enron would be a marked public good.

But the GOBBLES presentation, “The Wolves Among Us,” quickly marked a return to spectacle.[88] The GOBBLES representative, “Nwonknu,” was joined on stage by Stephen Watt and Silvio Cesare, hackers who had recently been employed with Qualys, the security firm founded by members of ADM. Watt, who penned some of the early Anti-Security Movement texts under the handle “jimjones,” presented himself at DEF CON as “The Unix Terrorist.” This name was drawn from the extensive, absurdist—and probably spurious—members roster found in the most recent edition of the ~el8 textfile.[89] The speakers proceeded with a sustained ironic tone, calling out white hat-type hackers in the room and passive-aggressively interrogating those who came up to the stage to defend themselves or attempt to dialogue. The talk later attained legendary status among resolutely underground hackers, and spurred on still more attempts in mailing lists, IRC channels, and conference hallways to figure out just what exactly was going on, who was GOBBLES, and whether the entire Anti-Security Movement was some strange form of proxy infight on the acceptable modes of professionalizing among those in ADM’s orbit.

The Rhetorical Escalation and Effectual Decline of Anti-Security Mayhem

All the while, Project Mayhem continued to roll on, with new missions and escalating rhetoric. The Anti-Security Movement’s playful calls to “Protect the wild-life—Save the bugs!” via anti-disclosure were twisted into calls to “Save a bug, kill a white hat!” PHC spokesperson “gayh1tler” began calling for a “white hat holocaust.”[90] In associated IRC channels, websites, and textfiles, casual racism, misogyny, and calls for violence became the rhetorical mode of the day.

Supporters trolled mailing lists devoted to full disclosure, pointing to the growing list of hacked white hats and security industry figureheads as evidence that the black hat scene possessed greater expertise than the people institutionally tasked with promoting security.[91] Further evidence, they argued, of the “snake oil” being pitched by the blossoming security industry. “Who are the scriptkids now? You’re outgunned and outclassed. Take a nap and retire, you pathetic leeches.”[92]

Perhaps remarkably, the unfolding drama had until now remained under the radar of the mainstream technology press. This changed on August 13, 2002, when WIRED published an article entitled “White-Hat Hate Crimes on the Rise.” It described Project Mayhem as “a violent incarnation of the ‘anti-sec’ movement, a campaign to persuade hackers not to publish information about the security bugs they uncover.”[93] The performative excesses of Project Mayhem thus entirely eclipsed the critically reflexive strains that had, at least in part, motivated the broader movement.

Many observers roundly dismissed anti-security in moral terms. Some even mused that the whole event was a false flag designed to support the very security industry it ostensibly opposed. One onlooker speculated that “the entire shenanigan was designed to ensure job security for those who fear the economic trends in IT employment.”[94] Another observed that “the fear PHC, ~el8 and such groups put into companies is actually helping sec.industry… This helps sell their service very well.”[95]

A small group of diehards kept Project Mayhem and PHC alive in one form or another over the next few years.[96] But much of their power to provoke was lost. Indeed, even the prospect of hacking white hats lost its edge as it transformed into an odd honorific; so many skilled hackers had been compromised in one way or another that being targeted had almost become a credential, a badge of significance and expertise.

Looking back on these events in a Phrack “prophile” a year later, in 2003, security.is member “Digit” admitted a cynical motive for his involvement in the Anti-Security Movement:

the true reasons behind antisec were not to create some greater security in the world or something like that which was mentioned in the FAQ and we took a lot of crap for. It was to keep security research where it belongs, with those that actually did it and at most a small tight knit group. That basically meant that people that found bugs, wrote exploits, and hacked wanted to keep their exploits/research private so that they had some nice private warez for some time ;>[97]

Whether all participants—across all the manifestations described above—were also cynically motivated is impossible to say.

Whatever the case, the notion of antisec, the specter of a “hacker class war,” and the general notion that the very meaning of “security” could itself be contested, remained significant for a global cast of resolutely underground writers publishing in Phrack and other venues for years afterwards. Some saw antisec as an inspiration,[98] while others saw it as a misguided endeavor that had only accelerated the underground’s demise.[99] Either way, the specter of antisec ultimately went on to serve as an important touchstone for a next generation of hacker activists—establishing a vision of an unruly hacker expertise operating outside the auspices of a security mainstream.

The Politics and Permanence of Anti-Security

We can consider the underground of this period through the lens of what Christopher Kelty has called a “recursive public”—a public defined by its commitment to maintaining and defending the integrity of the technological tools and infrastructure it requires to exist.[100] While Kelty explored the concept in relation to free and open source software communities, it applies just as well here. In this case, the hacker underground relied on both an active member base and continued—privileged—access to vulnerabilities as the condition of their existence. But it also ultimately benefitted from a sustaining, animating legend—a testament that the underground was not quite dead yet.

Anti-security demonstrated that not everyone who possessed real, practical hacking expertise was primarily out for a paycheck. Many were motivated by values and conceptions of “security” that diverged from those dominant in the corporate and national security arenas. Furthermore, it demonstrated that black hat hacking and the hacker underground could remain viable subcultural enterprises—viable, at least, for anyone willing to accept, look beyond, or attempt to supplant the flippantly macho and toxic dynamics on offer. While hackers continued to warn of the underground’s demise, texts generated by the Anti-Security Movement, ~el8, PHC, and the black hat bloc remained in circulation through website mirrors, textfile archives, and periodic porting-over to new web-based platforms. The specter of anti-security persisted, a touchstone frequently invoked by a next generation of hackers attempting to articulate, defend, or re-invigorate an underground sensibility and community both in their own textfiles and in publications like Phrack.

The lasting resonance of anti-security is most visible in the hacktivist endeavors that captured headlines throughout the 2010s. It is most explicit in Anonymous’ 2011 “Operation Antisec,” which took aim at what participants called the “security intelligence complex”—an updated correlate to the “security industry” of yesteryear. Indeed, some of the targeted companies employed “white hat” hackers known in the days of anti-security 1.0. A few years later, a hacker (or group of hackers) known as “Phineas Fisher” began targeting technology companies they held responsible for the suppression of political activism across the globe, including Hacking Team, as discussed in the introduction. In 2016, Fisher exfiltrated data from the union of the Catalonian police force, before turning their attention to the Turkish Justice and Development Party in solidarity with Rojava and Bakur, two anti-capitalist autonomous regions in Kurdistan.[101]

In April 2016, Fisher disseminated a “DIY guide” to encourage others—prominently featuring a urinating ascii art character first found in an ~el8 textfile some 14 years earlier, with “#antisec” printed underneath.[102] In 2019, they appropriated the tactics of what they called the “infosec industry,” announcing a “Hacktivist Bug Hunting Program” that aimed to incentivize hackers to secure “material of public interest” from banks, private prison operators, and other targets with the promise of monetary reward. As Fisher explained it, “this program is my attempt to make it possible for good hackers to earn an honest living uncovering material in the public interest, rather than having to sell their labor to the cybersecurity, cybercrime, or cyberwar industries” [translated from the original Spanish].[103]

Figure 5. Ascii art from ~el8 issue 3 from August 2002 (top) and ascii art from Phineas Fisher’s “hack back” DIY guide from April 2016 (bottom).

Fisher and likeminded hackers thus continue the tendencies of the earlier anti-security epoch: the spectacular, irreverent hack-and-leak logic exemplified by Project Mayhem, the unruly expertise typified by figures like GOBBLES, and the nascent envisioning of a public-interest mode of hacking. Only now, as mentioned in the introduction, their activities often align with the work of non-profit organizations like Citizen Lab—whose founder, Ron Deibert, has called for a “human-centric approach to cybersecurity.”[104] Indeed, Citizen Lab’s investigations often hinge on the same cast of actors, demonstrating how the tools produced by companies like Hacking Team, Gamma Group, and NSO Group are used to target journalists, activists, and dissidents around the globe.[105]

From this perspective, it is possible to imagine backwards what some of the original anti-security supporters may have been imagining forward in their visions of “people’s security” delivered by a “black hat bloc” of unruly computer security experts in opposition to a corporatized, militaristic security industry. Or at least, we can recognize that the inchoate rhetoric of anti-security has since inspired such imaginings in others.

“Hacking the Hackers: When Bug Bounty Programs Are the Target”

by Ryan Ellis, Northeastern University

In the past decade, bug bounty programs have become a common way to manage the identification and reporting of previously unknown and undisclosed software flaws.[106] Bounty programs, in their simplest form, pay hackers who find and disclose new bugs.[107] These programs have become a familiar security tool: Google, Facebook, the Department of Defense, Tesla, the retailer Lululemon, and hundreds of others now rely on bug bounties to help improve their security.[108] Bounties provide a way to organize hacker expertise and labor: enrolling hackers in a market that pays for successfully uncovering and reporting a new flaw.[109]

In a recent report, Bounty Everything: Hackers and the Making of the Global Bug Marketplace,[110] Yuan Stevens and I show how the bounty model of crowdsourced security creates new hazards for the hackers/workers who participate in bounty programs. While bounty programs offer a number of possible benefits, they also mimic other forms of gig work and saddle precarious workers with new risks.

In this short essay, I further argue that bounty programs serve as targets—sites of potential malicious exploitation and attack—that create previously unacknowledged risks. In reconceiving bounty programs as targets, I draw on recent publicly reported incidents that (1) provide a window into the security of bug bounty programs and platforms, and (2) demonstrate the perceived value of coopting or repurposing key elements of exploits and attacks. Ultimately, this reframing calls for prioritizing the security of bounty programs.

Hacking the Hackers: Subverting Bounty Platforms

Bounty platforms, which organize and manage bounty programs on behalf of clients, have themselves been hacked in recent years. Documented incidents provide a window into bounty security and indicate the value that an adversary might find in subverting a bounty program.

In 2019, HackerOne, a bounty platform that hosts several hundred bounty programs and counts over one million registered hackers, reported a security breach.[111] A hacker working under the handle haxta4ok00 found a way to gain unauthorized access to a HackerOne security analyst’s account (a still-valid session cookie had been inadvertently disclosed during an earlier interaction).[112] The hacker reported the bug to HackerOne and the company resolved the problem by revoking the session cookie, thus removing the path to unauthorized access. Haxta4ok00 received a $20,000 bounty for reporting the issue.[113] The story attracted attention in the popular press. The irony was clear: a company devoted to harnessing the power of hackers for good had been hacked.[114]

Access to the session cookie indicated the potential value of bounty programs and platforms as a target. Gaining access to the HackerOne security analyst’s account would allow an attacker to access otherwise unknown and unfixed bugs across a selection of HackerOne’s programs.[115] HackerOne provides a range of services for the companies and organizations that have bounty programs on their platform, including review and triage of incoming reports.[116] This moderation is one of the core services that bounty platforms provide: public bounty programs regularly receive a flood of invalid reports. Triage service, whether outsourced to a bounty platform or performed by in-house staff, is essential to identifying new bugs within a steady stream of invalid or irrelevant submissions.[117] The hacker haxta4ok00 demonstrated how useful targeting a bounty program or platform might be. In accessing the employee account, they instantly gained visibility into bugs that had been submitted but not yet reviewed or remediated by the vulnerable host organization. HackerOne’s incident report was plain: with this somewhat simple flaw an intruder would have been able to access all of the programs and all of the reports associated with the analyst’s account; they would have been able to access both the metadata associated with each report and the contents of the report.[118]

While credential theft or spoofing provides one way to take advantage of the pool of unfixed bugs, insider threats present a similar risk. In June 2022, HackerOne disclosed another security incident. An unidentified employee who worked in triage improperly leveraged their access to submitted bugs. Rather than pushing the bugs through the triage and mitigation pipeline, they attempted to steal bugs for personal gain.[119] They created a fake HackerOne account and submitted these bugs to a number of different bounty programs in the hopes of claiming payment for novel bugs.[120]

These two incidents are striking in just how ordinary they are. Credential theft enabled by weak security practices, and insiders accessing systems for unauthorized purposes, are not unique or surprising vectors of compromise. These sorts of incidents regularly happen across organizations. What makes these cases relevant or sobering is the potential impact that the subversion of a bounty platform might cause. Bounty programs, by their very nature, gather and host sensitive data that, if it fell into the wrong hands, would allow malicious actors to generate novel exploits and attacks. Identifying and developing novel attacks requires a specific type of expertise, including the discovery of vulnerabilities on endpoints that can be exploited. Rather than cultivating this expertise, some actors might find it easier or more advantageous to simply steal the capability. Indeed, as the next set of cases shows, that is precisely what has happened.

Reuse/Recycle/Redeploy: Coopting Expertise

As Ben Buchanan observes in his book, The Hacker and the State: Cyber Attacks and the New Normal of Geopolitics, hacking is one of the central ways that states try to shape geopolitics.[121] The use of cyber operations to spy, disrupt, and destabilize requires the development of novel exploits and attacks and, by extension, the cultivation of expertise.[122] This cultivation takes many different forms, including bureaucratic reorganization, formal investment in training and tool development, contracting with third-party proxies, and other techniques. Additionally, a number of recent cases point toward a different approach to cultivating expertise: co-option. As states race to compete and create new capabilities, the theft and redeployment of an adversary’s (or, as the case below suggests, an ally’s) capabilities is an attractive option. Rather than investing resources in developing native tools or components (such as finding a novel flaw that can be used as a basis for an exploit or attack), stealing another’s hard work is a useful shortcut.

Examples of co-opting are readily available. The United States reportedly “piggybacked” on South Korean capabilities to gain visibility into North Korean computer networks during a period when its own access was otherwise limited.[123] Reports in Der Spiegel, tied to documents leaked by former National Security Agency (NSA) contractor Edward Snowden, describe this hacking of hackers as a common practice christened “fourth-party” collection.[124] Other examples of this approach—states (or other actors) hacking into other ongoing hacking attempts—point to the prevalence of fourth-party hacking by other parties.[125] These accounts make it clear that the value of this approach is two-fold. In addition to providing capabilities that were otherwise unavailable or out of reach, co-option provides an added layer of deniability or obfuscation.

Perhaps the most high-profile example of coopting is the case of ETERNALBLUE. In 2016, a group known as “the Shadow Brokers” claimed to have successfully pilfered a collection of secret NSA tools and documents. In time, they released these capabilities, including ETERNALBLUE, a powerful exploit that targeted Windows machines. This tool was repurposed, first by malicious actors linked to North Korea as part of the May 2017 ransomware attack known as “WannaCry” and then, the following month, in the Russian-backed destructive attack “NotPetya.” While WannaCry was broadly disruptive, the damage associated with NotPetya was unprecedented, totaling an estimated $10 billion.[126] The most destructive cyberattack in history was, in part, a story of the effectiveness of repurposing or coopting expertise through theft.

Expertise is not only something to be developed; sometimes it can be stolen.

Conclusion: Bug Bounty Programs as Target

Reconsidering bounty programs as targets reveals an important point: the utility of bounty programs can be compromised by weak security practices. Bounty programs are designed and intended to improve security, but poor controls or protection of the bug pipeline can undermine any presumed or hoped-for security gains. Recasting bounty programs as targets highlights the importance of prioritizing security in handling the submission, review, and mitigation of bugs. Bugs are valuable and they should be protected like other valuable assets. Here, as in other domains, increasing security raises the costs for attackers.

There are, however, larger lessons as well. Bounty programs or other caches of unpatched bugs are always going to be attractive targets. Reducing their value is nonetheless possible. Developing well-resourced and integrated bounty programs can reduce “time-to-fix,” the period between submission and mitigation deployment. Ensuring that bugs are, in effect, soon-to-spoil goods reduces their value. For an attacker, the value of unknown and unpatched bugs starts to erode once mitigations are developed and deployed.[127] Capturing a collection of soon-to-be-fixed bugs is less valuable than capturing long-lasting and durable vulnerabilities. Shrinking the window between initial disclosure and mitigation requires not just quickly reviewing or validating a bug; it also rests on successfully integrating an organization’s bounty program with the in-house staff who will develop and deploy the eventual fix.[128] Making sure an effective patch or update is available and adopted is vital to limiting the utility of a bug and shrinking the value of bounty programs as targets.

These simple solutions face an uncertain future. For the past decade, bounty programs have been adopted, in part, as a strategy for lowering the costs associated with security work.[129] The development of bounty platforms that mirror other forms of gig work rests on this basic premise.[130] These developments create cross-winds that can complicate matters. Bug bounty programs are increasingly run by bounty platforms. These companies are styled as lean platforms—they thrive by increasing scale, adding more bounty programs, more hackers, more submissions, while keeping costs and directly employed workers to a minimum.[131] This model might be difficult to reconcile with costly investments in security (as the hacking of bounty platforms described above might hint). At the same time, the business model of bounty platforms requires signing up an increasing number of companies to offer bug bounty programs. Not all companies may be ready to respond to the rush of incoming reports.[132] “Time-to-fix” may well suffer as overwhelmed organizations sputter in the face of a flood of new bugs. In such instances, bug bounty programs can create new risks. This state of affairs is not a technical failing, and not an inevitability, but a question of how expertise is to be organized and valued.

“The Recursive, Geopolitical, and Infrastructural Expertise of Malware Analysis and Detection.” by Andrew Dwyer, Royal Holloway, University of London

The computational device used to read this essay, and the network providing access to this forum, are both likely to be protected by an often-ignored infrastructure that analyses and detects malicious software. Most evident in anti-virus and endpoint detection technologies, this infrastructure is silently at work in the background of our digital devices, dependent on a largely hidden network of people and computers that spans the globe.

In this essay, I argue that this infrastructure is dependent on the recursive folding of the expertise of people who analyse and write detections for malware—malware analysts—alongside the exploitation of greater computer automation and reasoning. Endpoint detection vendors generate detections by engaging in iterative feedback loops and recursive practices to limit and contour the cyber operations of states and cyber-criminals, even as the infrastructure itself is exploited for geopolitical advantage by states. The capacity to shape geopolitical action is dependent on, and sustained by, complex techno-human hybrids of expertise between malware analysts and computation, extending from an analyst’s hand-written detection to machine learning algorithms that construct new features to detect “suspicious” malware attributes.[133] Techno-human expertise is likewise facilitated by the sharing and analysis of big data as well as novel organisational practices that embed recursive, non-linear feedback loops into an infrastructure of malware analysis and detection. Together, techno-human expertise and a recursive infrastructure support one another, enabling endpoint detection vendors to claim that they can identify suspicious activity quickly, pre-emptively, and at scale. Regardless of the extent to which this claim is true, without such a recursive infrastructure of malware detection, the Internet and today’s geopolitical landscape would look considerably—if not radically—different.

The geopolitical importance of the combination of a recursive malware analysis and detection infrastructure and techno-human expertise is made most explicit in the removal of the Moscow-based endpoint detection vendor Kaspersky from government networks in various countries. In 2015, Israeli intelligence operatives who were exploiting Kaspersky’s computer network discovered a trove of hacking tools from the US National Security Agency (NSA). The operatives tipped off their counterparts at the NSA, who concluded that the Russian government had used Kaspersky’s infrastructure to gain access to those tools. As first reported in the New York Times in 2017, this resulted in Kaspersky and its infrastructure becoming a “Google search for sensitive information.”[134] In the same year, the United States prohibited the federal government from using the services or products of the Russian-based endpoint detection provider, arguing that Kaspersky threatened the integrity and confidentiality of government information.[135] In 2022, the US Federal Communications Commission (FCC) added Kaspersky to a list of firms that could not be paid by the FCC’s Universal Service Fund.[136] Similar calls and guidance to limit the use of Kaspersky have been made across Europe and elsewhere.[137]